For the past month or so I have been working with nested JSON data. I wanted to find the fastest strategy for data extraction. My initial attempt was using the tidyverse. I was put off by the time this took to run. Next, I tried using data.table which I am fairly unfamiliar with.

I was reading up on other methods and came across a post from Martin Morgan. He is the author of rjsoncons. His post covered the use of DuckDB for reading JSON which was quite performant.

While sick today I went through the same operation with each approach.

The JSON file I’ll be using is from a NCAA basketball game and is located here. I’m not sure how the performance of these different approaches will vary depending on the structure of the JSON file.

if (!file.exists("boxscore.json")) {

download.file(

"https://data.ncaa.com/casablanca/game/6351263/boxscore.json",

destfile = "boxscore.json"

)

}

{tidyverse} Approach

This is the easiest to understand method in my opinion. It simply reads the JSON file using jsonlite, pulls out some metadata about the teams, pulls out some player statistics, and joins them together. It returns a tibble.

{data.table} Approach

The process here is essentially the same as above. This however returns a data.frame.

data.table_style <- function() {

j <- fromJSON("boxscore.json")

teams <- data.table(j$teams)[, .(

teamId = as.character(teamId),

rbindlist(playerStats)

)]

return(

merge(

j$meta$teams,

teams,

by.x = "id",

by.y = "teamId"

) |>

setnames("id", "teamId")

)

}DuckDB Approach

For DuckDB we need to load up an in-memory database. Also, we have to install and load the JSON extension for DuckDB.

This also returns a data.frame.

duck_style <- function() {

dbGetQuery(

con,

"

with raw as (select meta, teams from 'boxscore.json'),

meta as (select unnest(meta.teams, recursive := true) from raw),

teams_large as (select unnest(teams) as team from raw),

teams as (

select

team.teamId::VARCHAR as teamId,

unnest(team.playerStats, recursive := true)

from teams_large

)

select * exclude meta.id

from meta, teams

where meta.id = teams.teamId

"

)

}Benchmark

results <- microbenchmark::microbenchmark(

duck = duck_style(),

tidy = tidyverse_style(),

dt = data.table_style(),

times = 100

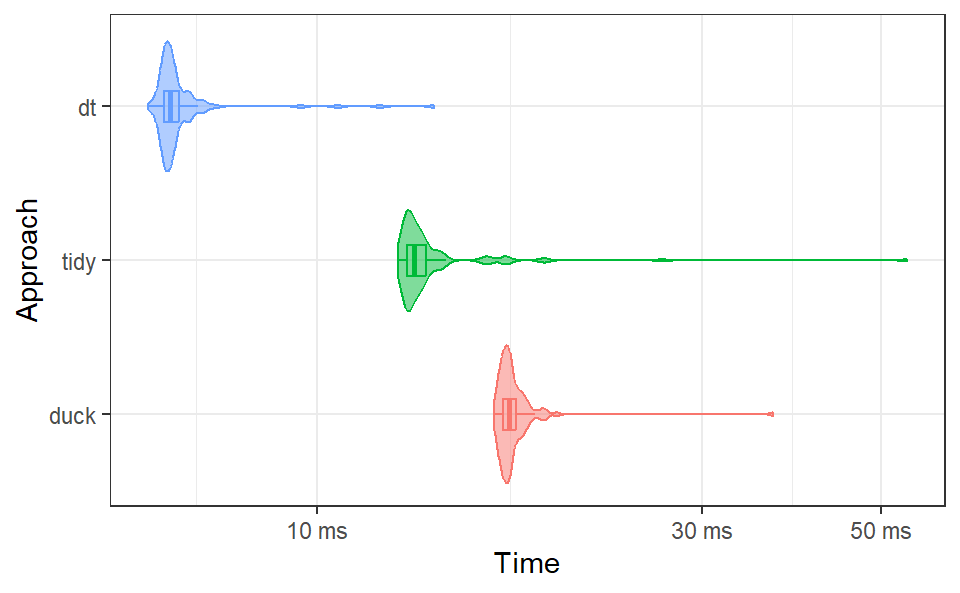

)In my testing with a standard R script, DuckDB came out about 7ms faster than the tidy approach. I’m not sure what the difference is.

We see that data.table is the clear winner here with times below 10ms. DuckDB looks to be the most consistent.

There are probably some more optimizations that I am not seeing, but this is what I can up with in a few hours.